After our previous post that broke down the process of setting up and deploying to Samsung’s GearVR, we took on the challenge of converting one of our in-house DK2 demos to work with it. It wasn’t simply a matter of plug-and-play with the Unity game engine. We had to completely rethink how we had optimized 3D geometry in the past, because of differences between the PC and mobile platforms such as the greater importance of draw calls. We also had to watch polycount closely, because mobile hardware can handle relatively few polygons. Last but not least, one of the key factors of a good VR demo is an intuitive input method, so we experimented with the Gear’s touchpad until we found a solid solution. The model we worked with was a simple solar-powered house design that was originally modeled in Revit and then brought into 3ds Max.

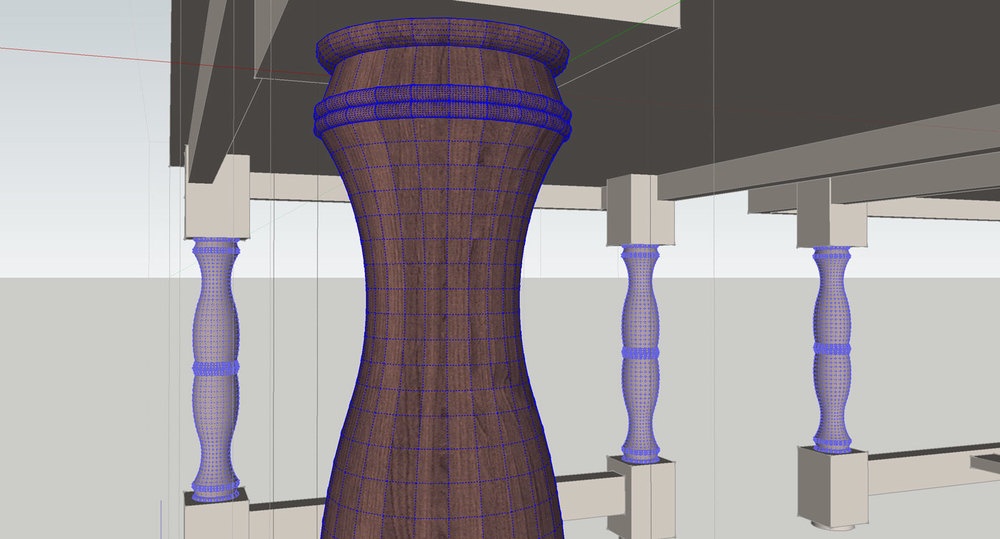

If you're working on SketchUp files that will be shown in VR, it can be helpful to keep an eye on polygon count. Here's a quick post on how to find it.
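Polygon count matters because game engines such as Unity render triangles, while a single SketchUp face can be a polygon with many edges. The sketch below is a minimal illustration, not anything from SketchUp's own API. It estimates the rendered triangle count from a hypothetical list of faces, assuming each face is a simple polygon without holes, so that an n-sided face splits into n - 2 triangles.

```python
# Hypothetical input format: each face is a list of vertex indices describing a
# simple polygon (no holes). This is NOT the SketchUp Ruby API; it only shows the
# arithmetic of turning a face count into a triangle count.


def triangle_count(faces: list[list[int]]) -> int:
    """Return how many triangles the faces become after triangulation."""
    total = 0
    for face in faces:
        n = len(face)
        if n < 3:
            raise ValueError(f"face {face} has fewer than 3 vertices")
        total += n - 2  # a simple n-sided polygon always splits into n - 2 triangles
    return total


if __name__ == "__main__":
    # A cube modeled with six quad faces renders as twelve triangles.
    cube = [
        [0, 1, 2, 3], [4, 5, 6, 7], [0, 1, 5, 4],
        [1, 2, 6, 5], [2, 3, 7, 6], [3, 0, 4, 7],
    ]
    print(len(cube), "faces ->", triangle_count(cube), "triangles")
```

Faces with holes triangulate into more pieces, so for that kind of geometry this estimate runs low.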

At Iris, we are always excited to try out new toys and share what we discover. With the release of Samsung’s GearVR at the height of the holiday season, Jack and Amr couldn’t think of a better time to dive into mobile VR.

Update: you can learn more about our SketchUp workflow in this blog post.

As more companies get their hands on Oculus Rift development kits, VR demo stations are making appearances at gaming conventions, architecture and construction trade shows, and technology conferences. Giving comfortable, quick, non-nauseating VR demos is new territory, so we outlined our own trade show rig. Let us know what you think! Do you prefer a different setup? Start the conversation below.

IrisVR just got back from a week in Las Vegas exhibiting at Autodesk University 2014. It was a wonderful conference this year; 9500 well-fed attendees sat in classes ranging from BIM implementation to CGI breakdowns. Below is my recap of conference highlights and a short discussion around some of the exciting tools coming down the pike for VR and ArchViz.

On September 12th, three members of the IrisVR team made a trip out to Seattle for the AEC Hackathon. The event brought industry experts and tech enthusiasts from all over the country to solve a challenge related to Architecture, Engineering, or Construction. There were 3D printers, laser scanners, a full-motion virtual reality environment, drones, and more. Of course, we brought an Oculus Rift.

Our team was made up of Nate, Jack, and me, Greg. The only instruction we received was to use technology to solve a ‘pain point’ in the industry. We’re mostly programmers without much direct AEC experience, so we started the morning by asking around to identify some pain points. Before long, we ran into Dan Shirkey and Keith Walsh from Balfour Beatty Construction. Dan is the Technology Center of Excellence Leader for the company’s West Region; his primary focus is to capture and grow the best uses of technology and to bring new technology and innovation to Balfour Beatty Construction. Dan and Keith identified workplace safety as a top priority, and together we came up with a plan to use technology to improve safety training. Soon we had our team: Hazyard.

The Oculus Rift engages your head, but what engages your body? The trouble with such an immersive VR headset is that conventional input methods seem to fall flat. Who wants their eyes exploring virtual reality while their hands stay behind in the real world, tethered to a mouse and keyboard? It’s like going on a safari where you’re not allowed to leave the Jeep. In other words, no fun. At Iris, we continue to iterate through input paradigms until we find the perfect way to navigate a 3D space without assuming prior experience with 3D navigation from something like video games. It’s been a challenge to find a device that is simple to use but capable enough to support the full functionality of our software; however, we may have found a happy medium in the Myo armband by Thalmic Labs. The Myo fits around your forearm just below the elbow and uses electrical sensors to detect what your muscles are doing (and, by extension, what your fingers are doing). Check out a demonstration here.

Update: we've built tons of features into Prospect that can help you better navigate through virtual reality, including Viewpoints. Read more about Viewpoints here.

My name is Greg Krathwohl. I graduated from Middlebury College this spring, majoring in Computer Science and Economics. Before coming north to Vermont, I grew up in Ipswich, Massachusetts. I enjoy coding, running, adventuring, and making maps.

Every day at IrisVR, I’m learning more about architectural modeling and 3D graphics, but my interest in stereoscopic 3D started about 10 years ago, when I first discovered the Magic Eye books. I quickly mastered the technique of diverging my eyes to see the magical 3D image, and began to experiment with how they worked, creating my own little scenes in Microsoft Paint. After learning how stereo vision works, I started taking 3D pictures: a left image, and a right one taken a few inches away. To see the full effect, put the images side by side and diverge your eyes the same way you would to view a Magic Eye. I looked forward to the day when technology would let us revisit these scenes without this headache-inducing technique.

I was first introduced to programming at Middlebury, and I was fascinated by how code could create anything. I learned how computer vision could be used to identify edges in an image, find shapes, or pick out objects, and, most amazingly, how multiple views of an object could be used to recreate its 3D geometry. I spent last summer assisting with research for Professor Scharstein, who is known in the stereo vision community for his benchmarks. We captured scenes (random objects placed on a table) to create high-resolution depth maps.
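The key idea behind recovering 3D geometry from two views, like a left and right photo taken a few inches apart, is that nearby points shift more between the two images than distant ones. For a rectified stereo pair, the standard textbook relation is Z = fB/d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The sketch below is a minimal illustration under those idealized assumptions; the function names and numbers are hypothetical and are not taken from the Middlebury benchmark code.

```python
# Depth from disparity for an idealized, rectified stereo pair.
# Assumptions (illustrative, not from the original post): pinhole cameras with
# identical focal length, image rows already aligned, and disparity measured in
# pixels. Under those assumptions, depth Z = f * B / d.

from typing import Optional


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Return depth in meters for a single pixel's disparity."""
    if disparity_px <= 0:
        # Zero disparity means a point at infinity (or a pixel with no match).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_map(
    disparities: list[list[float]], focal_px: float, baseline_m: float
) -> list[list[Optional[float]]]:
    """Convert a disparity map to a depth map; non-positive disparities become None."""
    return [
        [depth_from_disparity(d, focal_px, baseline_m) if d > 0 else None for d in row]
        for row in disparities
    ]


if __name__ == "__main__":
    # Hypothetical values: 1000 px focal length, 0.1 m (about 4 in) baseline.
    disp = [[50.0, 100.0], [25.0, 0.0]]
    for row in depth_map(disp, focal_px=1000.0, baseline_m=0.1):
        print(row)
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance, which is why small matching errors matter most for faraway objects.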
