Based in Houston, Texas, the Engineers Without Borders-Johnson Space Center Chapter (EWB-JSC) is designing and implementing a water and sewer system and biogas digester installation at the Giika Orphan Rescue Center site in Giika, Kenya. The rescue center is home to about 30 teenage boys who were previously homeless because their families lacked the resources to support them. The EWB chapter was founded in 2005 and is one of the oldest in the organization, which itself dates back to 2002.

To develop the biogas digester system, EWB-JSC partnered with the JSC Sustainability Office and the Longhorn Project, a Houston-area vocational agricultural non-profit. Each group is using the project to explore a different application: EWB-JSC is interested in the technology for its work in Kenya, the Longhorn Project for local education, and NASA for potential applications on Mars. In spring 2016, NASA awarded an innovation grant to the Sustainability Office to further the partnership’s mutually beneficial work. EWB-JSC provides the design engineering, while the Longhorn Project provides the cattle and facilities. EWB-JSC designs both the Kenyan digester array and the space analog digester for Mars mission design.

EWB-JSC during their first assessment trip to Kenya in September 2015.

VR helps us to fully understand our design and to evolve it. It’s one thing to see it on screen; it’s another to ‘stand’ next to it.

Designing with Virtual Reality

The team at IrisVR spoke with James (Jake) Mireles, EWB-JSC Chapter President, to learn more about the design and the role virtual reality was playing in EWB-JSC’s work at the Giika Orphan Rescue Center.

Q: Could you explain the role of a biogas digester?

A biogas digester takes cow or other animal manure and mixes it into a slurry that is kept in a closed container at a consistent temperature, about that of a cow's intestine. Keeping the mixture in a closed container enables the anaerobic bacteria present to continue digesting the manure over time. The digestion process generates methane gas and fertilizer effluent. Biogas digesters are common in developing countries, where the methane they generate is used for cooking and the liquid effluent is used to help fertilize crops. NASA is interested in the methane both for cooking and as a rocket propellant, and in the effluent as fertilizer.

For example, in the picture above, there is a small farmyard behind the building that will supply manure to the digester, which is located downhill. The resulting methane gas will be piped back uphill to the buildings for fuel, and the effluent will be sent further downhill to crops and a fish farm.

Q: What was it like to integrate virtual reality into the project?

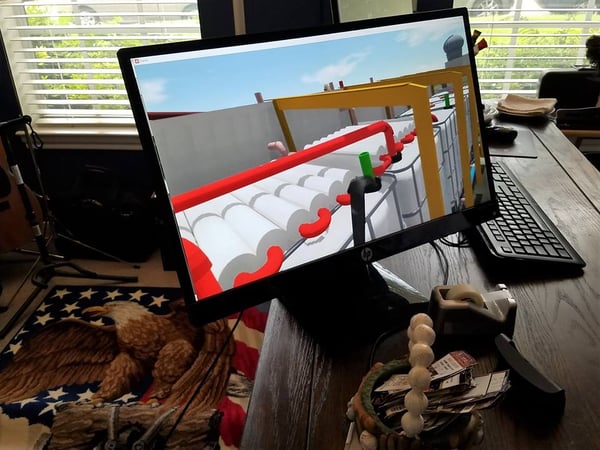

We built a 3D CAD model entirely in SketchUp and then used IrisVR Prospect to render the model in VR using the HTC Vive. We then used Prospect to study the model in the VR environment for design review, which allows us to more fully evaluate, then refine the design.

We went from “wow to workflow”—from a simple show-and-tell to a full-fledged design review—very quickly, with team members suggesting improvements based on their view in the VR environment. IrisVR enabled our team to fully immerse in the design and get a sense of the scale of the array as well as its technical operation. We figured out many improvements that would not have been discoverable without VR.

Q: How did you use virtual reality to communicate the vision for the project?

Outside of our team, we presented the design twice to the Johnson Space Center community. The first presentation was at our September [EWB-JSC] Chapter meeting and the second at an Innovation Days event held in early November 2016.

We had 260 visitors stop by the Innovation Days event, including Johnson Space Center senior management. Our presentation received the 2nd place “People’s Choice” award out of 36 presentations on various technological innovations.

Q: How has the engagement and reaction been in comparison to other meetings without VR?

The proof of its impact on our clients was the recognition we received at the November Johnson Space Center presentation. We have been invited to make a presentation to Johnson Space Center senior staff in January 2017, directly as a result of using IrisVR.

“When I set up the VR walkthrough, I thought we would spend maybe 15 minutes to half an hour total. After about 15 minutes the team moved past ‘wow’ and started working on the design. The session turned into a full-blown design review lasting two hours.”

SketchUp 3D model inside IrisVR Prospect during walkthrough.

Workflow & VR Hardware Setup

The EWB-JSC team created a virtual reality experience of their project by modeling all the components in SketchUp, then dragging and dropping their file into IrisVR Prospect and using the HTC Vive headset to explore. Here’s how they did it:

First, create your content: The model was built entirely in SketchUp in two parts: one was the digester design and the other was the environment, which included the campus, buildings, and terrain. The refined integrated model was then imported into Prospect.

Second, set up your VR headset and station: The team uses the HTC Vive to create a room-scale VR setup, using camera light tripods to place the trackers. The complete rig is mobile and transports in a rolling hard-shell toolbox purchased at a hardware store.

“Room-scale VR is far superior, and Prospect’s ‘teleportation’ technique ensured that no one experienced simulation sickness. The team worked in VR for two hours with no ill effects felt by anyone.”

Image Above: Chapter President James (Jake) Mireles sharing design in VR with participant. Provided by the NASA-JSC Imagery Online Database, NASA Photographer: Bill Stafford.

What's next for the team?

Keep up with developments at the Giika Orphan Rescue Center and the team's work by following their Facebook group. More recently, they won the People's Choice Runner Up award at the 2016 EISD Technology Showcase at NASA's Johnson Space Center.

Adding Virtual Reality to Your Workflow

Virtual reality is not a tool of the future, but a tool available to designers today. Incorporating VR into your project is as simple as the workflow Mireles's team used above. All you need to get started are four components:

1. 3D Model of your design

2. VR Headset (HTC Vive or Oculus Rift)

3. Computer that meets hardware requirements for VR

4. IrisVR Prospect installed on your machine
